Optimizing Static Websites with Next.js for PageSpeed and SEO Success

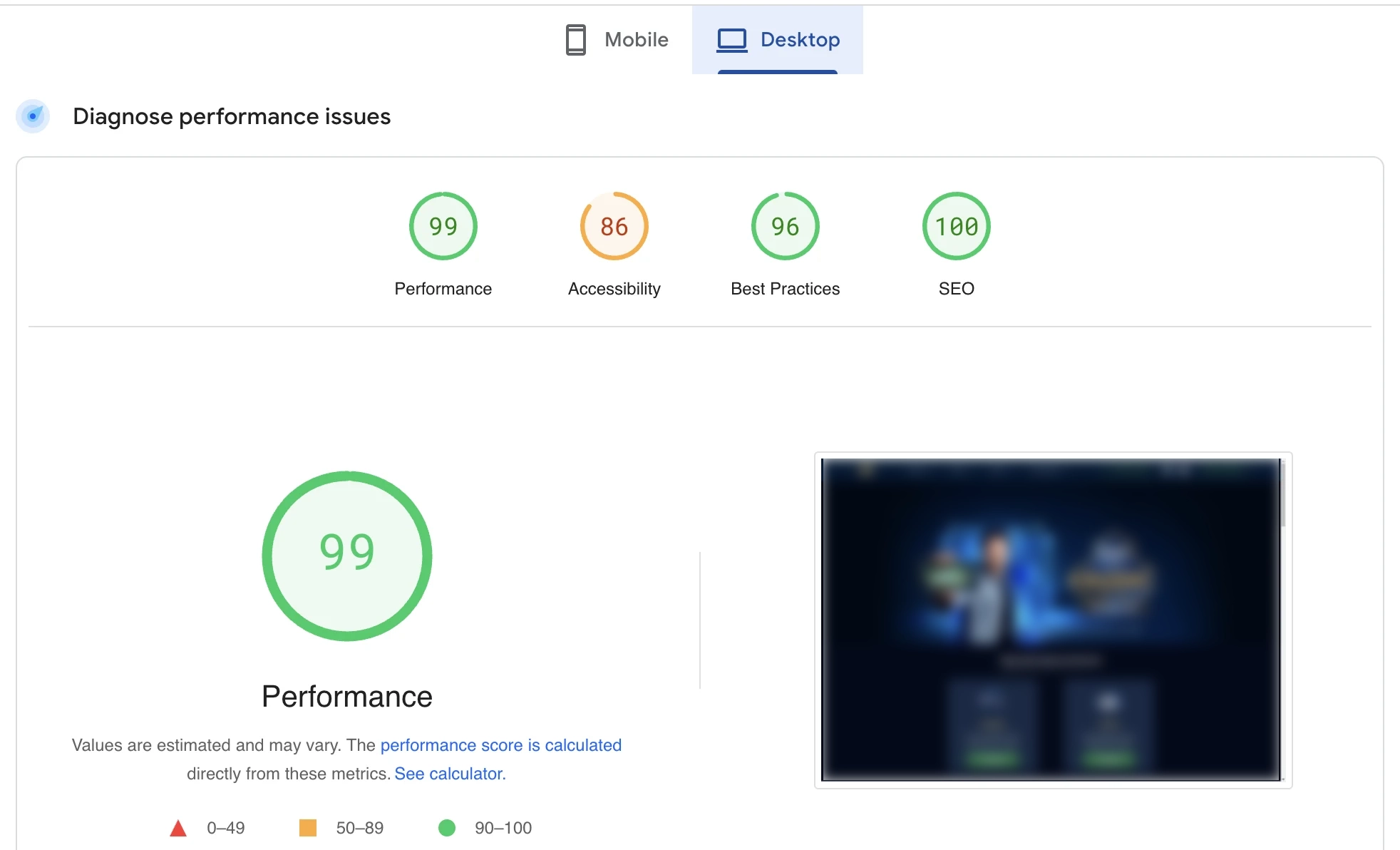

In today's digital landscape, achieving high PageSpeed scores and strong search engine visibility is essential for online success. By implementing industry best practices, we successfully optimized our Next.js static website hosted on AWS — achieving green scores on Google PageSpeed and securing a prominent position in Google search results.

Here are the key implementations carried out at Head Digital Works.

1. Choosing Next.js for Static Site Generation (SSG)

2. Hosting on Amazon S3 for Speed and Reliability

3. Key Performance Optimization Techniques

4. Impact of Source Code View and Browser Network Tab Preview

5. PageSpeed Insights: Key Metrics to Monitor

6. SEO Strategies for First-Page Ranking

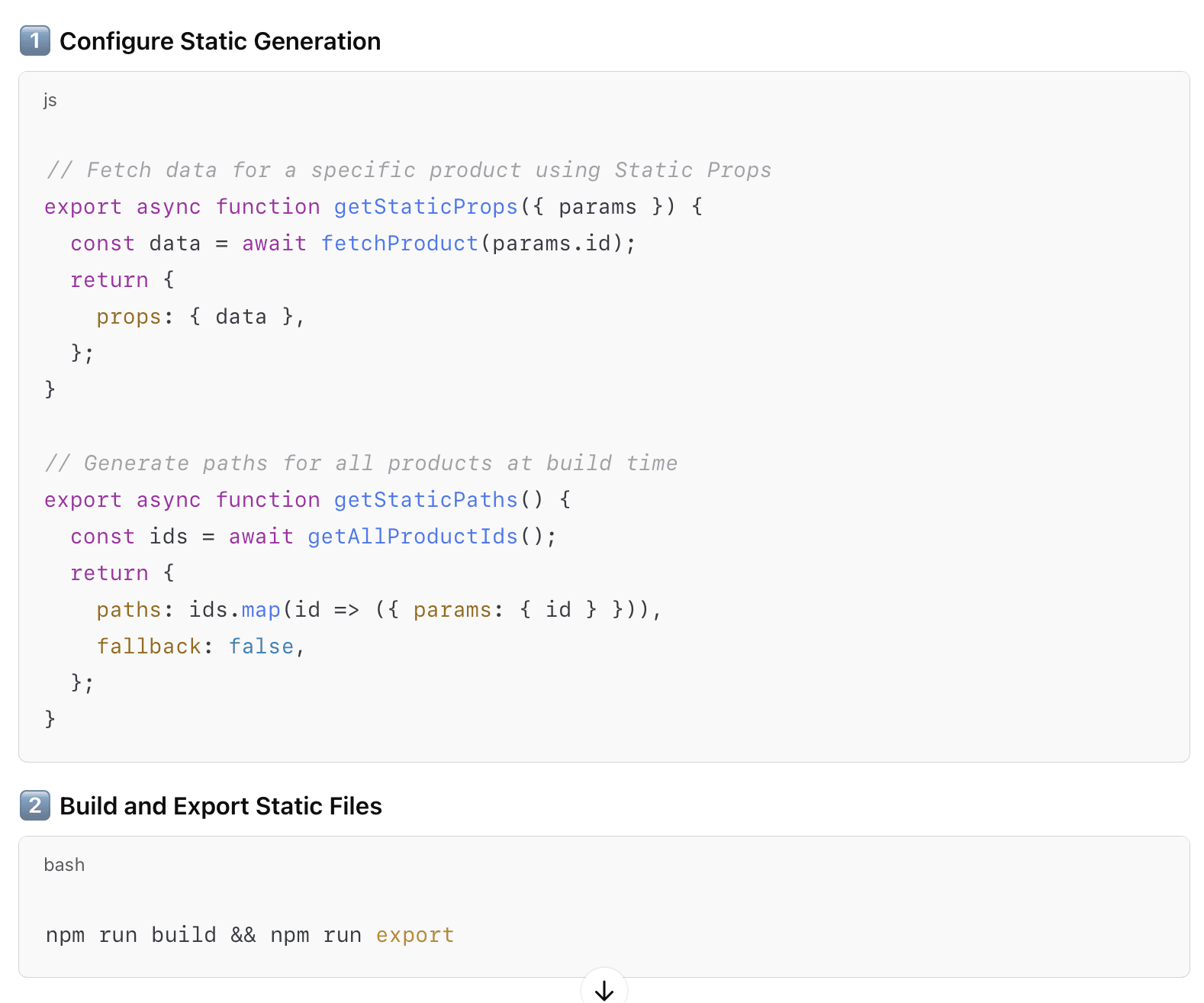

1. Choosing Next.js for Static Site Generation (SSG)

Next.js provides built-in static site generation (SSG). Because pages are pre-rendered at build time, they are served without any per-request server-side processing, which improves both load performance and SEO.

- Performance Optimization:

To enhance speed, reduce server load, and ensure maximum scalability, we adopted Static Site Generation (SSG) using Next.js. During the build process, pages are pre-rendered into static HTML, which is then deployed to an S3 bucket and served globally via the CloudFront CDN. This means that instead of generating pages dynamically on each request, the CDN delivers pre-built HTML from its nearest edge location. The result: significantly faster load times, no runtime rendering, and reduced backend pressure.

- SEO Benefits:

By generating fully pre-rendered pages at build time, we make it easy for search engines to crawl and index our content. Faster page loads also improve Core Web Vitals, which Google uses as a ranking signal.

- Cost Efficiency:

Without a backend server, we reduced infrastructure costs by deploying only static files to AWS. We configured Next.js with `output: 'export'`, which generates static HTML, CSS, and JS files. These are stored in S3 and served via CloudFront, providing low-latency delivery while eliminating compute overhead.
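A minimal `next.config.ts` for this kind of deployment might look like the sketch below. Only `output: 'export'` comes from the setup described above. `images.unoptimized` (the default `next/image` optimizer needs a running server) and `trailingSlash` (so routes are emitted as `route/index.html`) are our assumptions, and you may not need them. Typed `next.config.ts` files require Next.js 15 or later; the same object also works in `next.config.mjs`.

```typescript
// next.config.ts (sketch): static export for S3 + CloudFront hosting.
// Kept self-contained; a real project would usually annotate it with
// `import type { NextConfig } from "next"`.
const nextConfig = {
  // `next build` writes plain HTML/CSS/JS into the `out/` directory.
  output: "export" as const,
  // Assumption: no Next.js server at runtime, so the default image
  // optimizer is switched off and images are optimized before upload.
  images: { unoptimized: true },
  // Assumption: emit /about/ as about/index.html so directory-style URLs
  // map onto S3 object keys.
  trailingSlash: true,
};

export default nextConfig;
```

After `next build`, syncing the `out/` directory to the S3 bucket is the whole deployment.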

- Image Optimization:

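Built-in `next/image` optimization resizes and compresses images on request and serves modern formats such as WebP, improving page load speed without a visible loss in quality. This on-demand optimizer needs a running Next.js server. With `output: 'export'`, images therefore have to be optimized before upload (as described in section 3) or served through a custom loader.

- Automatic Code Splitting: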

Next.js provides automatic code splitting by default. Each page only loads the JS it needs, reducing initial bundle size and improving performance. When users navigate to other routes, only the additional required code is fetched — enabling smooth, fast transitions.

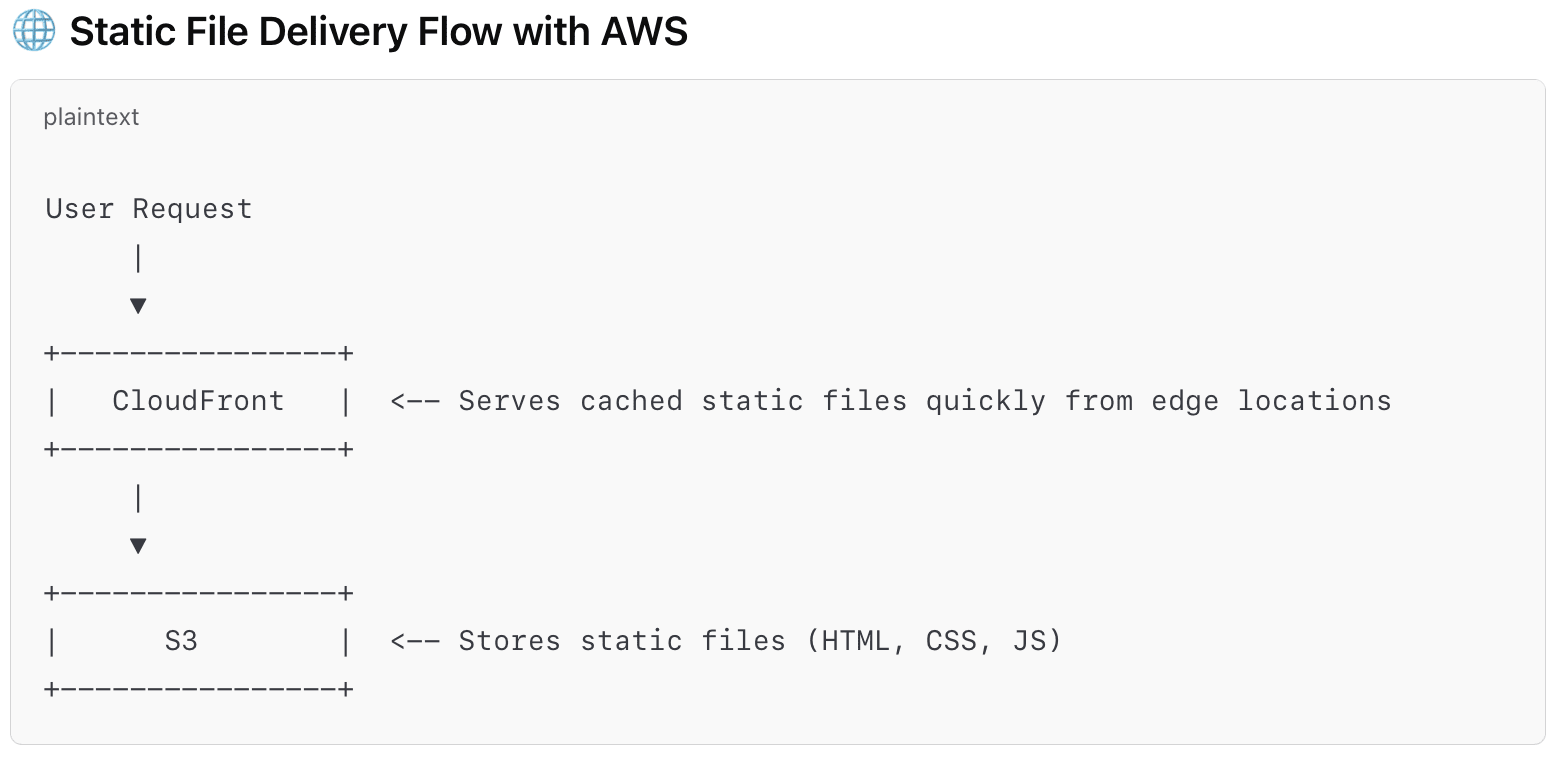

2. Hosting on Amazon S3 for Speed and Reliability

Hosting our static files on AWS provides speed and reliability by leveraging S3 for high availability and scalability. CloudFront ensures low latency via global edge caching. This setup is also cost-efficient, requiring minimal maintenance while offering fast, consistent delivery.
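One way to express the edge-caching split between fingerprinted assets and HTML is a small function that picks a `Cache-Control` value for each S3 object key at upload time. The sketch assumes Next.js's default `_next/static/` prefix for hashed build output. The `max-age` values are illustrative, not our production settings. The hypothetical `cacheControlFor` helper would feed whichever upload tool you use, for example the `CacheControl` field of an S3 `PutObject` request.

```typescript
// Hypothetical helper: choose a Cache-Control header for each file in the
// static export before uploading it to S3.
function cacheControlFor(key: string): string {
  // Fingerprinted build output (the file name contains a content hash) never
  // changes in place, so browsers and CloudFront may keep it for a year.
  if (key.startsWith("_next/static/")) {
    return "public, max-age=31536000, immutable";
  }
  // HTML points at the hashed assets and must be revalidated so a new deploy
  // is picked up. Browsers revalidate on every visit (max-age=0); the short
  // s-maxage (illustrative) lets CloudFront cache it briefly.
  if (key.endsWith(".html")) {
    return "public, max-age=0, s-maxage=300, must-revalidate";
  }
  // Everything else (public images, robots.txt, sitemap.xml, ...) has no
  // hash in its name, so it gets a moderate default.
  return "public, max-age=86400";
}

// Usage: print the policy each (hypothetical) file would receive.
const exportedFiles = [
  "_next/static/chunks/pages/home-ab3d4f.js",
  "index.html",
  "about/index.html",
  "images/hero.webp",
];
for (const file of exportedFiles) {
  console.log(`${file} -> ${cacheControlFor(file)}`);
}
```

Long-lived caching is only safe for hashed names. That is why HTML is the one file type where a CloudFront invalidation occasionally matters.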

3. Key Performance Optimization Techniques

To achieve green scores on Google PageSpeed Insights, we implemented the following:

- Image Optimization

- Converted images to modern formats like WebP.

- Compressed using TinyPNG before uploading.

- Stored optimized images on a CDN for delivery.

- Used Next.js `next/image` for lazy loading and automatic optimization.
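With `output: 'export'` there is no server for the built-in optimizer. A custom loader is one way to keep `next/image`'s lazy loading and `srcset` generation while serving images that were already converted to WebP and stored on a CDN. Such a loader is registered through `images.loader: 'custom'` and `images.loaderFile` in `next.config`. The sketch below assumes a hypothetical CDN host (`cdn.example.com`) and a hypothetical naming scheme in which each image was pre-exported at fixed widths as `name-<width>.webp`. The props type mirrors `next/image`'s `ImageLoaderProps` but is declared locally to keep the file self-contained.

```typescript
// image-loader.ts (sketch): custom next/image loader for pre-optimized WebP
// files on a CDN. Host and file-naming scheme are hypothetical.
type ImageLoaderProps = { src: string; width: number; quality?: number };

const CDN_ORIGIN = "https://cdn.example.com"; // hypothetical host
// Widths at which every image was pre-generated (assumption).
const AVAILABLE_WIDTHS = [640, 828, 1200, 1920];

export default function cdnImageLoader({ src, width }: ImageLoaderProps): string {
  // Pick the smallest pre-generated width that still covers the requested
  // width, falling back to the largest variant for very wide requests.
  const chosen =
    AVAILABLE_WIDTHS.find((w) => w >= width) ??
    AVAILABLE_WIDTHS[AVAILABLE_WIDTHS.length - 1];
  // "/images/hero.png" becomes "/images/hero" before the suffix is added.
  const base = src.replace(/\.[a-z0-9]+$/i, "");
  // `quality` is ignored: files were already compressed before upload.
  return `${CDN_ORIGIN}${base}-${chosen}.webp`;
}
```

With this loader registered, a request for `/images/hero.png` at 1000px would resolve to `https://cdn.example.com/images/hero-1200.webp`.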

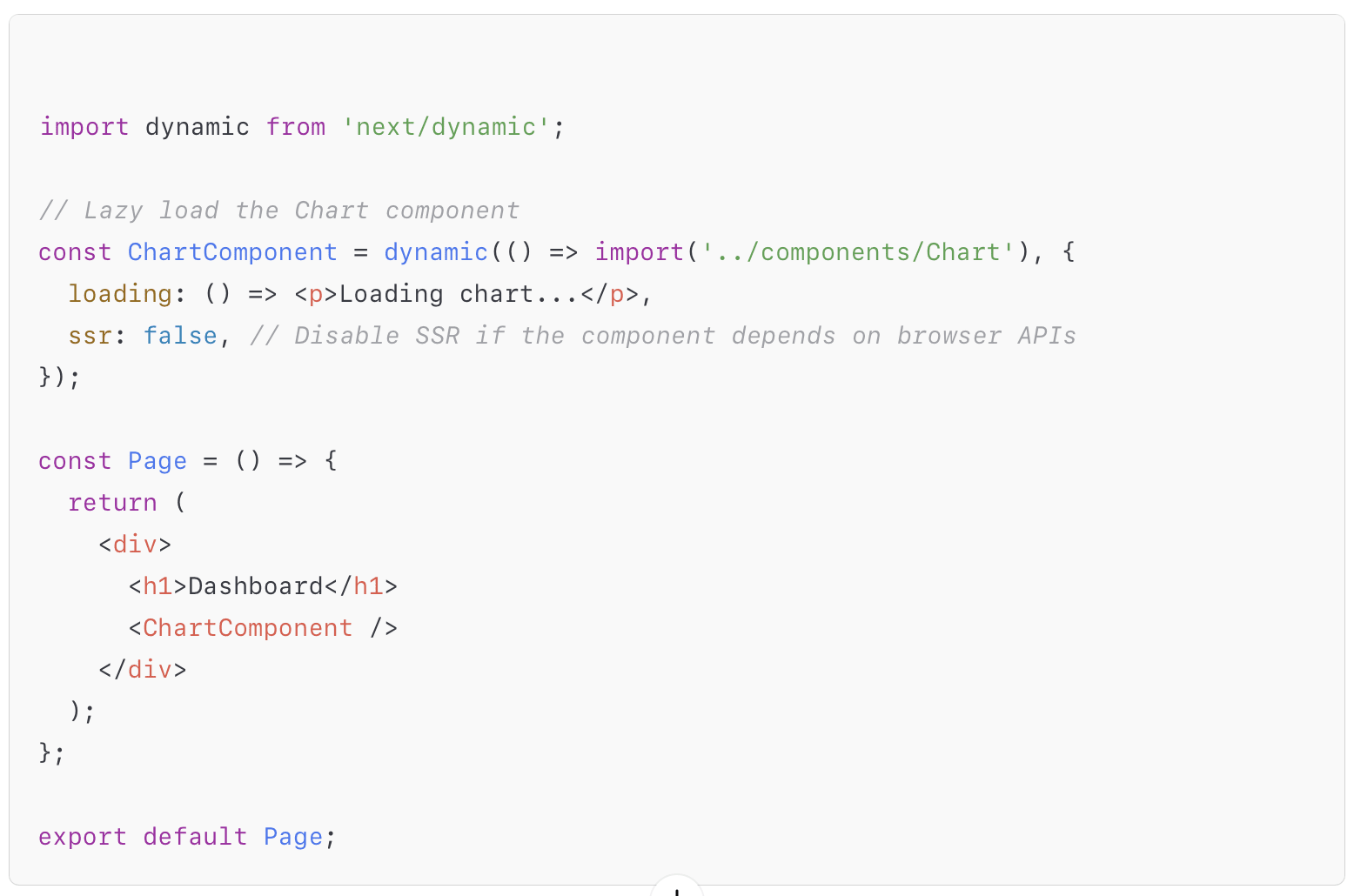

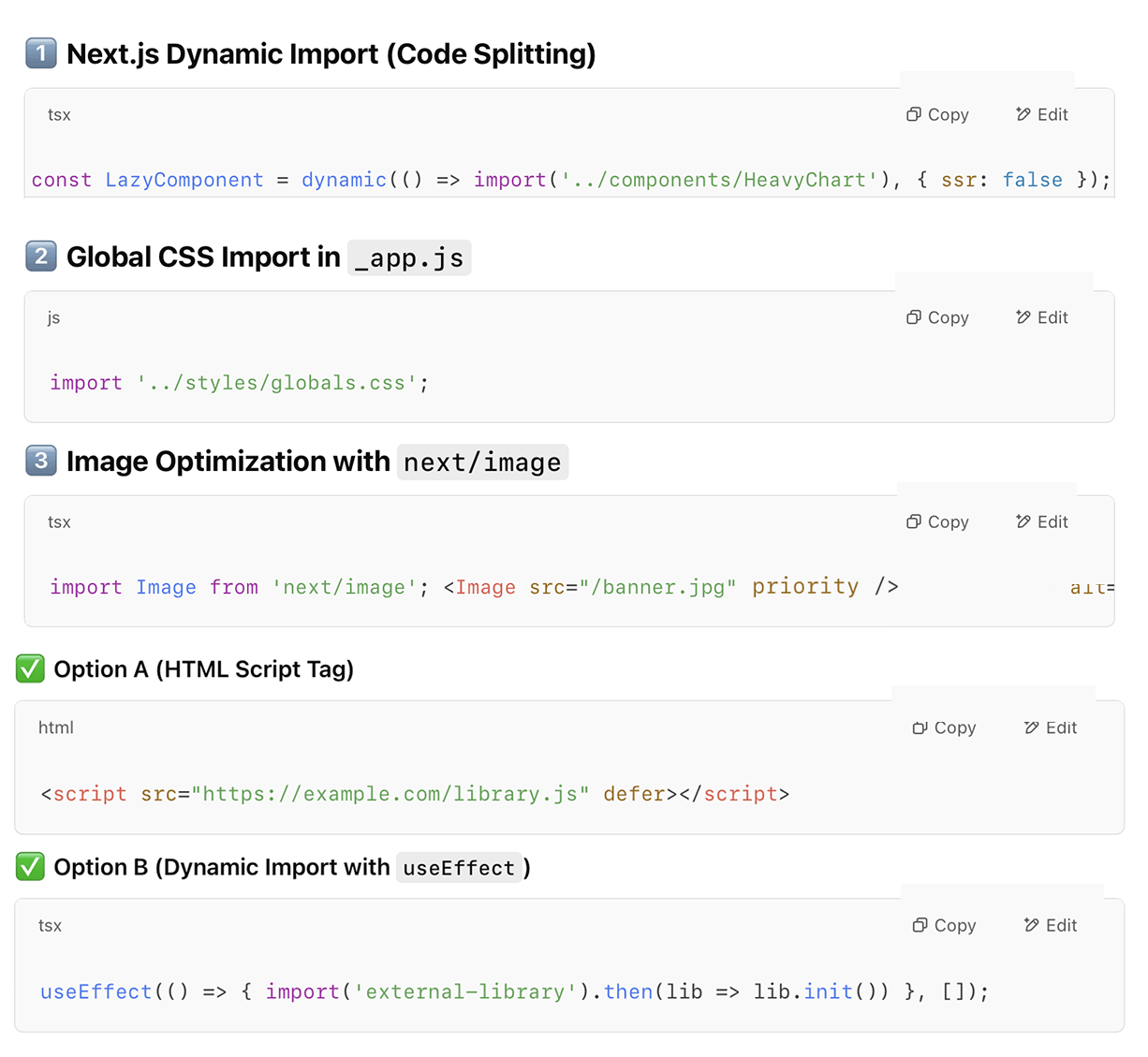

- Code Splitting and Lazy Loading

We used `next/dynamic` to split and lazy load components. Non-critical UI (modals, charts, etc.) is only loaded when needed, reducing the initial JS payload and speeding up first paint.
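`next/dynamic` wraps the standard dynamic `import()` expression. The bundler emits the imported module as a separate chunk that is fetched only when first requested. The framework-free sketch below shows that underlying pattern with a hypothetical `lazyOnce` helper, which defers a loader until first use and shares one in-flight request across callers. In a real page the loader would be something like `() => import("./ChartModal")`; here an inline async function stands in for it so the snippet runs on its own.

```typescript
// Hypothetical helper illustrating on-demand loading: the loader runs only on
// the first call, and every caller shares the same promise. (A production
// version would also reset `pending` on failure so the load can be retried.)
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader();
    }
    return pending;
  };
}

// Stand-in for `() => import("./ChartModal")` (hypothetical module).
let loads = 0;
const loadChart = lazyOnce(async () => {
  loads += 1;
  return { render: (points: number[]) => `chart with ${points.length} points` };
});

// Nothing is fetched at startup; the first user interaction triggers the load.
async function onOpenChartClick(): Promise<string> {
  const chart = await loadChart();
  return chart.render([1, 2, 3]);
}
```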

- Efficient Caching and CDN Usage

AWS S3 + CloudFront serve static assets with long-lived edge and browser caching. We use hashed filenames (e.g., `home.ab3d4f.js`) to enable automatic cache busting: a changed file gets a new name, so cached copies of the old one never go stale. HTML updates trigger CloudFront invalidation only when necessary, reducing overhead and maintaining freshness at the edge.

- Minimizing Render-Blocking Resources

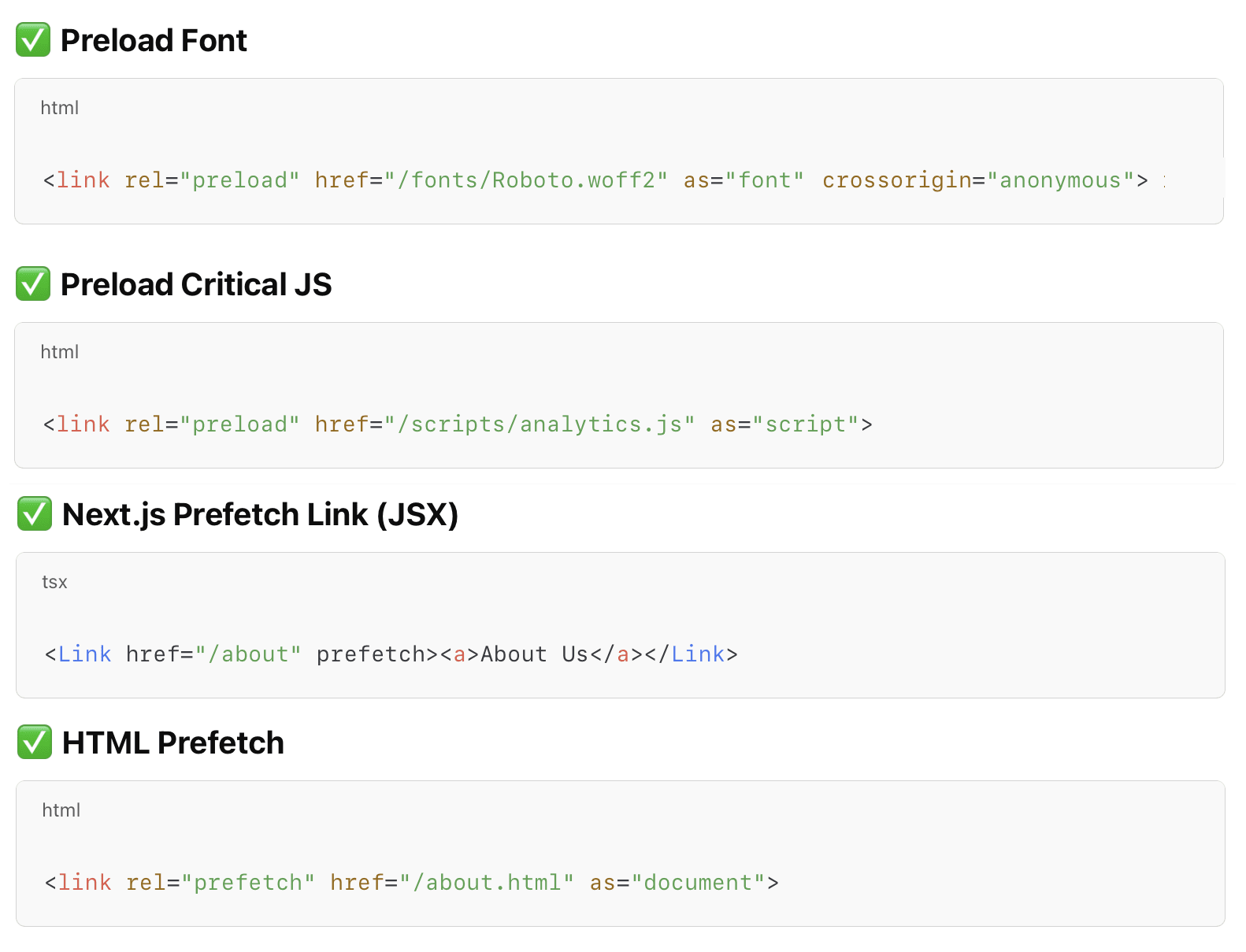

We used `rel="preload"` for fonts and scripts needed early and `rel="prefetch"` for assets needed later. Non-essential JS was deferred or dynamically imported. These techniques improved perceived and actual load time, especially on mobile and slower networks.
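The two hints differ in priority. `preload` fetches a resource the current page needs soon, at high priority. `prefetch` fetches something a later navigation is likely to need, at low priority. The sketch below renders both kinds of `<link>` tags as strings; the file paths are hypothetical. One detail is easy to miss: font preloads need `as="font"`, a `type`, and the `crossorigin` attribute (even for same-origin fonts), otherwise the browser cannot reuse the preloaded response and fetches the font twice.

```typescript
// Sketch: build resource-hint tags for the document <head>.
type Hint =
  | { kind: "preload-font"; href: string }
  | { kind: "preload-script"; href: string }
  | { kind: "prefetch"; href: string };

function renderHint(hint: Hint): string {
  switch (hint.kind) {
    case "preload-font":
      // Fonts are always fetched in CORS mode, so the preload must match.
      return `<link rel="preload" href="${hint.href}" as="font" type="font/woff2" crossorigin>`;
    case "preload-script":
      return `<link rel="preload" href="${hint.href}" as="script">`;
    case "prefetch":
      // Low-priority fetch for a resource a later page will likely need.
      return `<link rel="prefetch" href="${hint.href}">`;
  }
}

const headHints: Hint[] = [
  { kind: "preload-font", href: "/fonts/inter-var.woff2" }, // hypothetical path
  { kind: "preload-script", href: "/js/critical.js" }, // hypothetical path
  { kind: "prefetch", href: "/images/pricing-hero.webp" }, // hypothetical path
];
console.log(headHints.map(renderHint).join("\n"));
```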

- Fixing Broken Links

- Scanned with Google Search Console and Screaming Frog SEO Spider

- Ensured internal/external links are valid and updated

- Used 301 redirects for deprecated URLs

- Validated all links across site, social media, and sitemap
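Crawlers such as Screaming Frog do this work for you, but the core internal-link check is simple to sketch. Collect every internal link, resolve it through the 301 redirect map, and flag anything that does not land on a known route. The route list, redirect map, and page data below are hypothetical; a real script would read them from the build output and the sitemap.

```typescript
// Sketch of an internal-link audit over a static export (hypothetical data).
type PageLinks = { page: string; links: string[] };
type BrokenLink = { page: string; link: string };

function findBrokenLinks(
  pages: PageLinks[],
  routes: Set<string>,
  redirects: Map<string, string>, // old path -> new path, served as a 301
): BrokenLink[] {
  const broken: BrokenLink[] = [];
  for (const { page, links } of pages) {
    for (const link of links) {
      // External and protocol-relative links need a real HTTP request;
      // this sketch skips them.
      if (!link.startsWith("/") || link.startsWith("//")) continue;
      // Drop query string and fragment, then follow redirects. The hop
      // limit stops an accidental redirect cycle from looping forever.
      let path = link.split(/[?#]/)[0] ?? link;
      for (let hops = 0; hops < 10; hops++) {
        const next = redirects.get(path);
        if (next === undefined) break;
        path = next;
      }
      if (!routes.has(path)) broken.push({ page, link });
    }
  }
  return broken;
}

const knownRoutes = new Set(["/", "/about", "/careers"]);
const redirectMap = new Map([["/jobs", "/careers"]]);
const report = findBrokenLinks(
  [{ page: "/", links: ["/about", "/jobs", "/blog/old-post", "https://example.com"] }],
  knownRoutes,
  redirectMap,
);
console.log(report); // only "/blog/old-post" on page "/" is reported
```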

4. Impact of Source Code View and Browser Network Tab Preview

Google crawlers and performance auditing tools analyze how efficiently a website loads and renders. Ensuring a clean source code structure and optimized network requests contributes to better indexing and rankings:

a. Clean Source Code Structure

To maintain a clean and efficient codebase, we followed best practices for structuring the source code. We minimized the use of inline JavaScript and CSS, keeping scripts and styles modular and well-organized in separate files. This helped reduce code clutter and improved readability across the project.

We ensured that all components, pages, and utility functions followed consistent indentation and formatting guidelines, leveraging tools like Prettier and ESLint for auto-formatting and linting. Furthermore, we regularly removed unused variables, imports, and legacy code blocks, which improved build efficiency and reduced bundle size.

This approach not only made the codebase easier to maintain and scale, but also contributed to better performance and faster development cycles.

b. Optimizing Browser Network Requests

We focused on minimizing the number of HTTP requests made during page rendering. This was achieved by bundling and combining static assets such as JavaScript, CSS, and images wherever appropriate.

Using Next.js's built-in support for automatic code splitting and bundling, we ensured that only the necessary code for each page was served, eliminating redundant or duplicate requests.

Additionally, we leveraged modern build tools to consolidate styles and scripts into optimized bundles, minimizing round-trips and improving initial render speed. This significantly improved the efficiency of browser network requests and enhanced user experience, especially on slow connections.

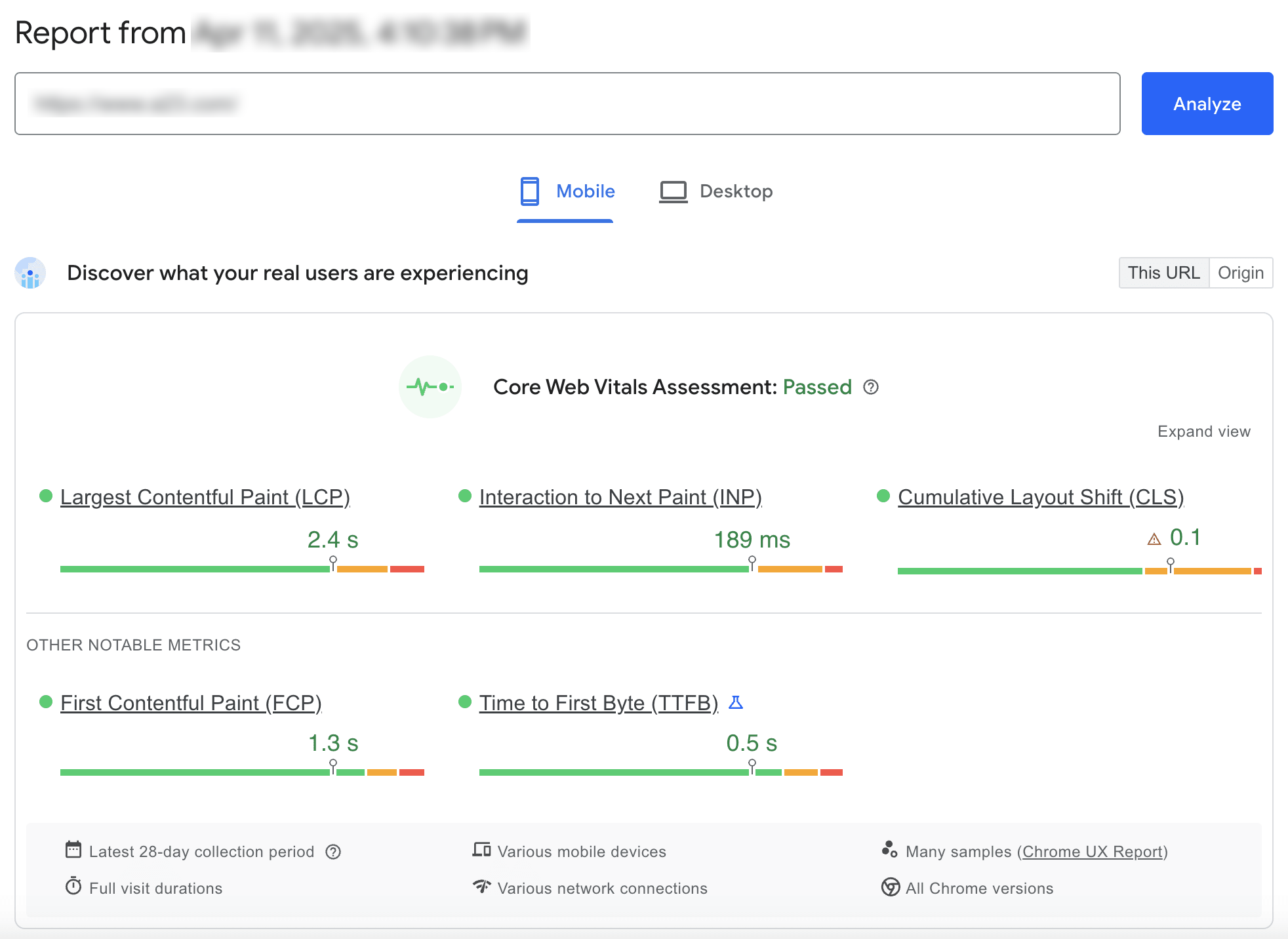

5. PageSpeed Insights: Key Metrics to Monitor

Google PageSpeed Insights evaluates website performance based on several critical metrics. Optimizing these ensures a smooth user experience and improved SEO rankings:

a. Largest Contentful Paint (LCP)

Improved speed and visibility of the largest above-the-fold content

🔍 Observations:

- The hero banner (largest visual element) was rendering late, heavily affecting LCP.

- Alice Carousel introduced extra JS weight and delayed rendering.

✅ Actions Taken:

- Replaced Alice Carousel with Embla Carousel, which has a significantly lighter footprint and better performance.

- Applied `loading="lazy"` to below-the-fold images and priority hints (the `priority` prop of `next/image`) to the banner image, so the banner itself is never lazy-loaded.

- Used preload for the banner image and ensured it's rendered as early as possible in the DOM.

- Served images at the dimensions at which they are actually displayed; trimming oversized images down to those dimensions reduced file size and improved image loading speed.

- Compressed and served images in next-gen formats (WebP) for better decoding and delivery.

📈 Impact:

- LCP improvement observed in both lab and field data (Lighthouse, Chrome UX Report).

- Faster perceived load of the hero section led to better user engagement.

b. Interaction to Next Paint (INP)

Improved responsiveness to user interactions

🔍 Observations:

- Heavy scripts and synchronous processing delayed interactivity, especially on mobile.

✅ Actions Taken:

- Removed unused JS and CSS to reduce payload.

- Deferred non-critical scripts to avoid blocking the main thread.

- Minimized expensive layout reflows and DOM manipulations.

- Adopted lightweight event handling techniques and asynchronous logic wherever possible.

📈 Impact:

- Smoother and quicker response to user actions (clicks, scrolls, navigation).

- INP scores moved closer to the ideal threshold in Lighthouse audits.
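A common way to apply the "asynchronous logic" point above is to break long-running work into chunks and yield to the main thread between them. This lets a click or key press be handled and painted without waiting for the whole job to finish. The sketch yields with a zero-delay `setTimeout` because that works everywhere; some browsers also expose `scheduler.yield()` for the same purpose. The helper names and chunk size are illustrative assumptions.

```typescript
// Sketch: process a large array without blocking input handling for long.
function yieldToMain(): Promise<void> {
  // Lets the browser run pending input handlers and paint before continuing.
  return new Promise<void>((resolve) => setTimeout(resolve, 0));
}

async function processInChunks<T, R>(
  items: T[],
  work: (item: T) => R,
  chunkSize = 50, // illustrative; tune so each chunk stays well under ~50 ms
): Promise<R[]> {
  const results: R[] = [];
  for (let i = 0; i < items.length; i += chunkSize) {
    for (const item of items.slice(i, i + chunkSize)) {
      results.push(work(item));
    }
    // Yield between chunks instead of running everything as one long task.
    await yieldToMain();
  }
  return results;
}
```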

c. Cumulative Layout Shift (CLS)

Stabilized layout to prevent unexpected visual movement

🔍 Observations:

- Layout shifts occurred due to images and dynamic elements without defined dimensions.

✅ Actions Taken:

- Added explicit width and height for all images and banners to reserve layout space.

- Set an explicit aspect ratio and min-height on each section and major parent element to lock down the layout structure.

- Controlled dynamic component injection to avoid pushing existing content.

- Used flexbox/grid layout to stabilize sections during content load.

- Avoided injecting ads, popups, or carousels without reserved space.

📈 Impact:

- No visual shifting during load, providing a seamless and professional user experience.

- CLS remained well below the 0.1 "good" threshold across all viewports.
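Some context on the 0.1 threshold helps here. Each unexpected layout shift is scored as its impact fraction multiplied by its distance fraction. The impact fraction is the share of the viewport touched by the shifting element before and after the move. The distance fraction is how far the element moved, relative to the viewport's larger dimension. CLS then reports the largest burst ("session window") of such scores. The sketch below scores a single shift of a single element, such as content pushed down by a banner whose height was not reserved. The rectangle values are made up for illustration.

```typescript
// Sketch: layout shift score for ONE element moving between two frames.
type Rect = { x: number; y: number; width: number; height: number };

function area(r: Rect): number {
  return Math.max(0, r.width) * Math.max(0, r.height);
}

function intersect(a: Rect, b: Rect): Rect {
  const x = Math.max(a.x, b.x);
  const y = Math.max(a.y, b.y);
  const right = Math.min(a.x + a.width, b.x + b.width);
  const bottom = Math.min(a.y + a.height, b.y + b.height);
  return { x, y, width: right - x, height: bottom - y };
}

function layoutShiftScore(before: Rect, after: Rect, viewport: Rect): number {
  // Only the visible parts of the element count.
  const b = intersect(before, viewport);
  const a = intersect(after, viewport);
  // Impact fraction: area of the union of both positions / viewport area.
  const unionArea = area(b) + area(a) - area(intersect(a, b));
  const impactFraction = unionArea / area(viewport);
  // Distance fraction: largest move (horizontal or vertical) / larger
  // viewport dimension.
  const distance = Math.max(Math.abs(after.x - before.x), Math.abs(after.y - before.y));
  const distanceFraction = distance / Math.max(viewport.width, viewport.height);
  return impactFraction * distanceFraction;
}

// A 400x200 block pushed down by 200px inside a 400x800 viewport.
const viewport = { x: 0, y: 0, width: 400, height: 800 };
const score = layoutShiftScore(
  { x: 0, y: 100, width: 400, height: 200 },
  { x: 0, y: 300, width: 400, height: 200 },
  viewport,
);
console.log(score); // 0.125
```

Here the impact fraction is 0.5 and the distance fraction is 0.25. A single unreserved push like this one already exceeds the 0.1 budget, which is why reserving space up front matters.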

d. First Contentful Paint (FCP)

Enhanced how quickly users see initial content

🔍 Observations:

- Delay in rendering text and visuals due to large JavaScript payload, unused CSS, and render-blocking resources.

✅ Actions Taken:

- Enabled dynamic imports to defer non-critical JS and improve initial render path.

- Used code splitting to load only what's necessary per page.

- Improved server response by serving static assets globally from Amazon S3 through the CloudFront CDN.

- Removed unused CSS using automated tooling and a manual audit.

- Separated critical and non-critical CSS — inlined critical CSS within HTML to reduce blocking render time.

- Kept TTFB low by avoiding server-side logic before rendering, since every page is pre-rendered at build time.

📈 Impact:

- Faster time-to-first-paint gave users quicker visual feedback and reduced perceived load time.

e. Time to First Byte (TTFB)

Observed improvement in server response time as a side-effect of frontend optimization

🔍 Observations:

- While no specific effort was directed at optimizing TTFB, we noticed a natural improvement in TTFB metrics during performance audits.

- This was likely influenced by the reduction in page weight, efficient resource loading, and leaner frontend rendering logic.

✅ Indirect Optimizations That Helped:

- Removed unused CSS and JavaScript, reducing initial payload size.

- Prioritized critical content rendering, leading to faster delivery of meaningful bytes.

- Lazy loaded non-critical elements, shifting their processing after the initial page response.

📈 Impact:

- TTFB values improved across multiple tests, contributing to better FCP and overall page load time.

- Even without direct backend tuning, the frontend improvements had a positive ripple effect on response times.

6. SEO Strategies for First-Page Ranking

Achieving strong visibility in Google search results required a well-structured SEO approach, so we strengthened search visibility with both technical and content-driven SEO enhancements.

To complement page speed optimization, we also worked on improving the discoverability and relevance of our website for search engines. These strategies were implemented with a focus on both technical SEO and user-first practices.

a. Structured Data & Metadata Optimization

- Integrated Open Graph and Twitter Card meta tags to improve link previews across social platforms, enhancing click-through rates.

- Leveraged `next/head` in Next.js to dynamically set page titles, meta descriptions, and keywords based on page context, ensuring each page was individually optimized for SEO.

- Maintained clean, concise meta content aligned with targeted search queries.
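With the Pages Router, per-page tags are rendered as children of the `<Head>` component from `next/head`. The framework-agnostic sketch below shows the data side. A hypothetical `buildMetaTags` helper derives the title, description, canonical URL, Open Graph, and Twitter Card tags from a page's context, so every page gets a consistent, individually tuned set. The `PageSeo` shape, the canonical link, and the site URL are assumptions.

```typescript
// Hypothetical helper: derive per-page SEO tags from page context.
type PageSeo = {
  path: string; // e.g. "/careers"
  title: string;
  description: string;
  image: string; // absolute URL of the social preview image
  keywords?: string[];
};

type MetaTag = { name?: string; property?: string; content: string };

const SITE_URL = "https://www.example.com"; // hypothetical

function buildMetaTags(seo: PageSeo): { title: string; canonical: string; meta: MetaTag[] } {
  const url = `${SITE_URL}${seo.path}`;
  const meta: MetaTag[] = [
    { name: "description", content: seo.description },
    // Open Graph: read by most social platforms to build link previews.
    { property: "og:type", content: "website" },
    { property: "og:url", content: url },
    { property: "og:title", content: seo.title },
    { property: "og:description", content: seo.description },
    { property: "og:image", content: seo.image },
    // Twitter Card: large-image preview on X/Twitter.
    { name: "twitter:card", content: "summary_large_image" },
    { name: "twitter:title", content: seo.title },
    { name: "twitter:description", content: seo.description },
    { name: "twitter:image", content: seo.image },
  ];
  if (seo.keywords?.length) {
    meta.push({ name: "keywords", content: seo.keywords.join(", ") });
  }
  return { title: seo.title, canonical: url, meta };
}
```

In a page component, each entry would become a `<meta>` element inside `<Head>`, alongside `<title>` and `<link rel="canonical">`.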

b. Semantic HTML & Accessibility

- Maintained a logical heading structure (`<h1>` → `<h2>` → `<h3>`) to reflect content hierarchy, aiding both screen readers and SEO crawlers.

- Added descriptive and keyword-rich alt attributes to all images to enhance image indexing and support accessibility.

- Added `title` attributes to major elements to make the page more crawler-friendly.

- Ensured semantic use of HTML5 elements (`<article>`, `<section>`, `<nav>`, etc.) for clarity and improved crawlability.

c. Sitemap & Robots.txt Configuration

- Created and submitted an XML sitemap to major search engines (Google, Bing) to ensure faster and deeper indexing of all relevant pages.

- Configured a robots.txt file to:

- Allow crawling of essential pages

- Block sensitive or irrelevant paths (like /api, /admin)

- Reduce crawl budget waste and prioritize key content
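Because the site is exported as static files, both files can be generated at build time and written into the export alongside the HTML. The sketch below builds them as strings following the sitemaps.org protocol and the usual robots.txt directives. The site URL and route list are hypothetical, and the blocked paths simply mirror the examples above. With `Allow: /` plus more specific `Disallow` lines, Google applies the most specific matching rule, so `/api` and `/admin` stay blocked.

```typescript
// Sketch: generate sitemap.xml and robots.txt for a static export.
const SITE = "https://www.example.com"; // hypothetical

function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// `lastmod` uses the W3C date format, e.g. "2024-01-31".
function buildSitemap(routes: { path: string; lastmod: string }[]): string {
  const urls = routes
    .map(
      (r) =>
        `  <url>\n    <loc>${escapeXml(SITE + r.path)}</loc>\n    <lastmod>${r.lastmod}</lastmod>\n  </url>`,
    )
    .join("\n");
  return (
    `<?xml version="1.0" encoding="UTF-8"?>\n` +
    `<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n${urls}\n</urlset>\n`
  );
}

function buildRobots(disallow: string[]): string {
  return [
    "User-agent: *",
    "Allow: /",
    ...disallow.map((p) => `Disallow: ${p}`),
    `Sitemap: ${SITE}/sitemap.xml`,
    "",
  ].join("\n");
}

console.log(buildRobots(["/api", "/admin"]));
```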

d. Schema Markup Integration

- Integrated multiple schema types to enhance SERP (Search Engine Result Page) visibility and eligibility for rich snippets:

- Organization - for branding and contact info

- WebSite - for homepage and search actions

- FAQPage - to appear in FAQ-rich results

- BreadcrumbList - for enhanced navigational clarity in SERPs

- VideoObject - for featured video listings

- Review / Testimonial - for social proof and star ratings

- SoftwareApplication - highlighting app features and platforms

Result: Increased visibility across various content types and improved the chance of appearing in rich search results.

Laid a solid technical SEO foundation to support long-term ranking and indexing performance.
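Schema markup is usually embedded as JSON-LD in a `<script type="application/ld+json">` tag. The sketch below builds three of the listed types (Organization, FAQPage, BreadcrumbList) as plain objects using schema.org vocabulary. The helper names and all data are placeholders; the remaining types would follow the same pattern.

```typescript
// Sketch: JSON-LD objects for a few schema.org types (placeholder data).
type JsonLd = Record<string, unknown>;

function organization(name: string, url: string, logo: string): JsonLd {
  return { "@context": "https://schema.org", "@type": "Organization", name, url, logo };
}

function faqPage(items: { q: string; a: string }[]): JsonLd {
  return {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    mainEntity: items.map(({ q, a }) => ({
      "@type": "Question",
      name: q,
      acceptedAnswer: { "@type": "Answer", text: a },
    })),
  };
}

function breadcrumbs(trail: { name: string; url: string }[]): JsonLd {
  return {
    "@context": "https://schema.org",
    "@type": "BreadcrumbList",
    itemListElement: trail.map((crumb, i) => ({
      "@type": "ListItem",
      position: i + 1, // positions are 1-based
      name: crumb.name,
      item: crumb.url,
    })),
  };
}

// Serialize for a <script type="application/ld+json"> tag. Escaping "<"
// stops a value containing "</script>" from closing the tag early.
function toScriptBody(data: JsonLd): string {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}

console.log(toScriptBody(organization("Example Co", "https://www.example.com", "https://www.example.com/logo.png")));
```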

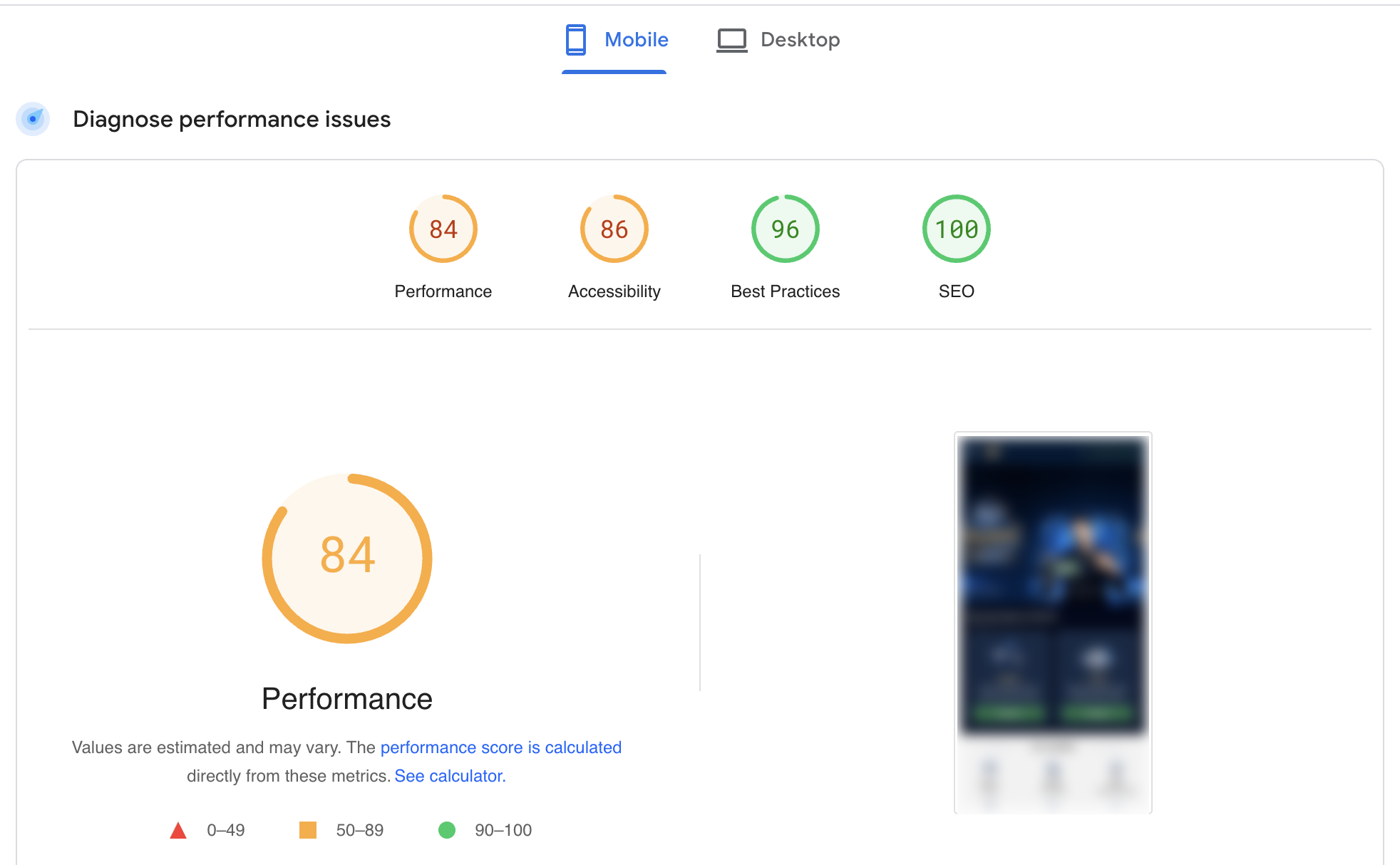

Final Results

With these optimizations, our Next.js static website consistently scored 90+ on Google PageSpeed Insights and gained prominent visibility within the top search results on Google. This demonstrates the impact of aligning performance tuning with effective SEO strategies in Next.js projects.

By following these steps, you can ensure that your Next.js website is optimized for both speed and search engine rankings, driving more traffic and enhancing user experience.